Hello, ladies and gentlemen! In this article I want to tell you how I block harmful bots such as Ahrefs, Semrush, PetalBot, Majestic, MegaIndex and many others. Bots that actively crawl a site cause a lot of problems, and at a certain point they start eating up a huge share of the resources of the server your site is hosted on.

Bots need to be blocked

Bots put a huge extra load on the server, and that means paying for additional resources or moving to a more expensive hosting plan.

But there’s a bigger problem: bots collect information about you. Services such as SemRush, Majestic or LinkPad give your competitors a lot of information about your site: the satellite sites you use for promotion, your traffic, your link profile and all the anchors you promote with.

In general, thanks to the information that these services collect, your site is in the palm of your competitors’ hands. So bots need to be blocked, especially if you have a commercial project.

Keep in mind that the older and more visible your site is on the web, the more bots will come to it. At first there will be few of them, and the load they create will not be a problem.

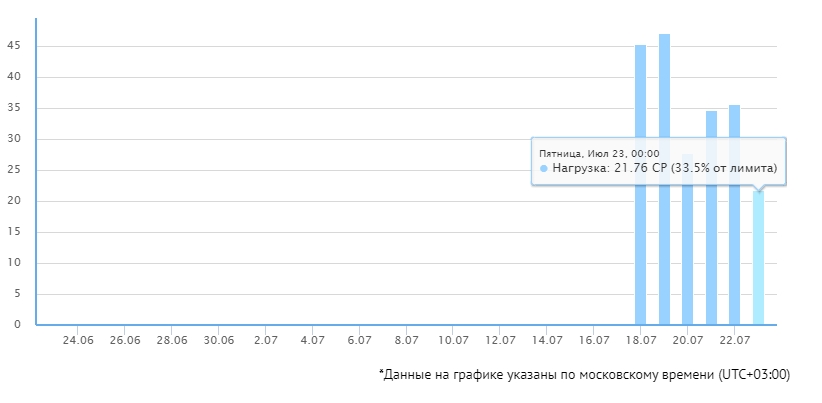

Eventually there will be a lot of bots, and the hosting or server resources will no longer be enough. That is exactly what happened on my site. I even had to move it to a different hosting provider to get more resources, but even there the resources were almost exhausted.

Then I decided to stop being lazy and block the bad bots. I picked out the ones that had visited my site over a few days and blocked them through .htaccess.

The load on the server dropped noticeably. Of course, 20-30% is not a huge difference, but the larger the site, the more bots it attracts, so that 20-30% turns into overpayment for hosting or a VPS.

Sure, the result may not look very impressive, but it was worth it. Now I don’t need to buy additional CPU time or switch to a more expensive plan, so a simple bot block saves money.

I encourage you to do the same.

How to block bad bots

Let’s divide bots into two groups:

Useful bots. Search engine crawlers and the bots of their services, for example YandexBot and Googlebot-Image. Don’t block them, or your search engine rankings will suffer.

Bad bots. Various services such as SemRush, Ahrefs, Megaindex, etc. These are the ones we will cut off.

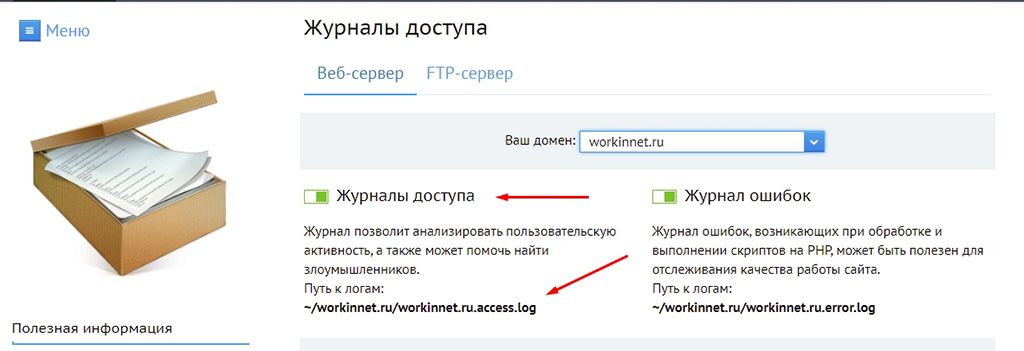

After you block the various bad bots, your server load will drop significantly. If your hosting provider notifies you that the CPU time limit has been exceeded, it’s time to cut off some of the bad bots. You can see in the logs which robots and crawlers in particular are driving up the load.

You can find the logs on the server: visitor logs are usually written to separate log files. For example, on Fozzy you can see them in the “Statistics” section of DirectAdmin, while on Beget you have to enable “Access Logs” separately in the hosting control panel. If you don’t know how to do this yourself, ask your hosting provider; they will help you.

After that you will see something like this: the logs list lots of User-Agent strings, various IP addresses and the time of each visit.
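On a busy site, reading the log by eye gets tedious. Below is a minimal Python sketch that counts requests per User-Agent, assuming the log uses the common “combined” format (both Apache and Nginx can write it); lines in any other format are simply skipped. The function names are my own, not part of any tool.

```python
import re
from collections import Counter

# Combined log format:
# IP ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def count_user_agents(lines):
    """Count requests per User-Agent; lines that don't match are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:
            counts[match.group("agent")] += 1
    return counts


def top_agents(path, n=20):
    """Print the n busiest User-Agents found in the log file at `path`."""
    with open(path, encoding="utf-8", errors="replace") as log:
        for agent, hits in count_user_agents(log).most_common(n):
            print(f"{hits:8d}  {agent}")
```

Calling `top_agents("access.log")` (the file name is just an example) lists the User-Agents that send the most requests; the self-identifying bots usually stand out right away.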

I don’t recommend blocking everything mindlessly. You need to isolate the bot: if you block every User-Agent you see, you will block real visitors too.

A lot of bots identify themselves. They’re the easiest to weed out.

Some bots don’t identify themselves or even masquerade as search engine crawlers. You can cut them off by IP address, but this is of little practical use, since malicious robots constantly change their IP addresses.

You can also set up filtering with reverse DNS lookups, but this is not an easy task, so in this article we will limit ourselves to blocking bots that identify themselves, plus the most “brazen” IP addresses.
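For reference, here is roughly what reverse DNS filtering involves. Google and Yandex both describe the same check for their crawlers: look up the hostname for the IP, make sure it belongs to their domain, then resolve that hostname forward and confirm it returns the same IP. This is a Python sketch under assumptions: the hostname suffixes below should be re-checked against each search engine’s current documentation, `gethostbyname_ex` handles IPv4 only, and the lookup functions are parameters so they can be swapped out for testing.

```python
import socket

# Trusted hostname suffixes (assumption: based on Google's and Yandex's
# crawler verification guides; re-check them against current docs).
CRAWLER_SUFFIXES = (".googlebot.com", ".google.com",
                    ".yandex.ru", ".yandex.net", ".yandex.com")


def is_verified_crawler(ip, suffixes=CRAWLER_SUFFIXES,
                        reverse_lookup=socket.gethostbyaddr,
                        forward_lookup=socket.gethostbyname_ex):
    """Forward-confirmed reverse DNS check for an IPv4 address.

    1. Reverse lookup: IP -> hostname (PTR record).
    2. The hostname must end with one of the trusted suffixes.
    3. Forward lookup: hostname -> IPs, which must include the original IP.
    """
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:  # socket.herror / socket.gaierror: no PTR record
        return False
    if not hostname.lower().rstrip(".").endswith(suffixes):
        return False  # the PTR record points outside the trusted domains
    try:
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False
    # Anyone can publish a fake PTR record, but only the domain owner
    # controls the forward record, so the round trip must return `ip`.
    return ip in addresses
```

Each call performs live DNS queries, so a check like this is better run over the log in the background than on every request.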

Of course, I’ve published a list of helpful and harmful bots, to make it clear what I’m blocking and why. I will update the lists periodically and edit this article as well. But that article covers only bots that can be identified.

Let’s start with blocking via the .htaccess file, because it is the most effective option.

Blocking bots via .htaccess

This option is the best, because bots and crawlers often ignore the directives in robots.txt. You can block either with the SetEnvIfNoCase User-Agent directive or with RewriteCond. The first method is better, at least in my opinion.

This list contains exactly the bots I picked out on my site, and the block worked: in the logs you will see that these bots get a 403 server response, so the load on your site goes down.

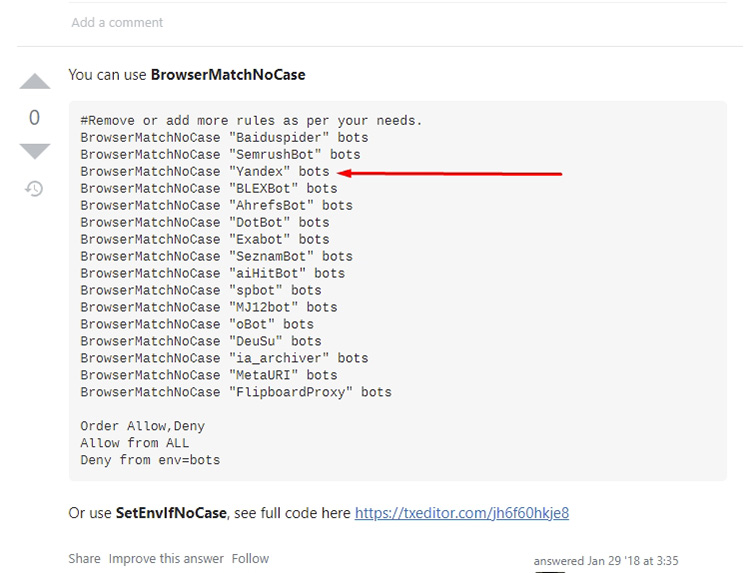

Keep in mind that many sites publish similar lists for blocking bots and crawlers. I recommend that you not copy them mindlessly: such directives are often written by people abroad, so they block the Yandex and Mail.ru crawlers without a second thought, which hurts the site’s rankings in Yandex.

A typical example of such code:

And many content creators from the CIS copy such code to their sites without thinking. So look carefully and analyze.

My versions include only the bots that hit my site. Your logs may show other robots and crawlers.

So, here is blocking via .htaccess. All bots on the list will get a 403 server response, i.e. “access denied”. This will seriously reduce the load on your site.

I use this code, and it has proven to work well:

```apache
# Block MJ12bot only if you don't work with Majestic. For example, if you
# use link exchanges (Gogetlinks, Miralinks), Majestic data matters a lot
# there, so it's better not to block it.

# Slurp is the Yahoo! search crawler. If your site targets a foreign
# audience and gets traffic from Yahoo, it's better not to block it.

# Block FlipboardRSS and FlipboardProxy only if you don't target Flipboard.

SetEnvIfNoCase User-Agent "Amazonbot|BlackWidow|AhrefsBot|BLEXBot|MBCrawler|YaK|niraiya\.com|megaindex\.ru|megaindex\.com|Megaindex|MJ12bot|SemrushBot|cloudfind|CriteoBot|GetIntent Crawler|SafeDNSBot|SeopultContentAnalyzer|serpstatbot|LinkpadBot|Slurp|DataForSeoBot|Rome Client|Scrapy|FlipboardRSS|FlipboardProxy|ZoominfoBot|SeznamBot|Seekport Crawler" blocked_bot

# Order/Allow/Deny is Apache 2.2 syntax; Apache 2.4 accepts it only with
# mod_access_compat. The native 2.4 form is:
#   <RequireAll>
#     Require all granted
#     Require not env blocked_bot
#   </RequireAll>
<Limit GET POST HEAD>
    Order Allow,Deny
    Allow from all
    Deny from env=blocked_bot
</Limit>
```
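Before putting the list on a live site, it’s worth checking which User-Agents it would actually catch. SetEnvIfNoCase performs a case-insensitive regular-expression match anywhere in the header, and Python’s `re.search` with `re.IGNORECASE` approximates that well enough for a sanity check. Apache uses a different regex engine, so treat this as a rough test; the `would_be_blocked` helper is hypothetical.

```python
import re

# The same alternation as in the SetEnvIfNoCase line above.
BLOCKED_AGENTS = re.compile(
    r"Amazonbot|BlackWidow|AhrefsBot|BLEXBot|MBCrawler|YaK|niraiya\.com|"
    r"megaindex\.ru|megaindex\.com|Megaindex|MJ12bot|SemrushBot|cloudfind|"
    r"CriteoBot|GetIntent Crawler|SafeDNSBot|SeopultContentAnalyzer|"
    r"serpstatbot|LinkpadBot|Slurp|DataForSeoBot|Rome Client|Scrapy|"
    r"FlipboardRSS|FlipboardProxy|ZoominfoBot|SeznamBot|Seekport Crawler",
    re.IGNORECASE,  # SetEnvIfNoCase ignores case
)


def would_be_blocked(user_agent):
    """True if the pattern occurs anywhere in the header (unanchored search)."""
    return BLOCKED_AGENTS.search(user_agent) is not None
```

Running the real User-Agents from your log through `would_be_blocked()` is a quick way to catch a pattern that accidentally hits YandexBot or ordinary browsers.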

Then you can see in the logs that the blocked bots get a 403 server response:

As a result, the bot cannot parse your content or download site data such as RSS or other feeds.

Here is another variant of the code; it may not work on every server:

```apache
# No "^" anchors: many of these bots send a User-Agent that begins with
# "Mozilla/5.0 (compatible; ...", so the name is matched anywhere in it.
# [NC] makes the match case-insensitive; names containing a space are quoted.
RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} Amazonbot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} BlackWidow [NC,OR]
RewriteCond %{HTTP_USER_AGENT} AhrefsBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} BLEXBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} MBCrawler [NC,OR]
RewriteCond %{HTTP_USER_AGENT} YaK [NC,OR]
RewriteCond %{HTTP_USER_AGENT} niraiya\.com [NC,OR]
RewriteCond %{HTTP_USER_AGENT} megaindex\.ru [NC,OR]
RewriteCond %{HTTP_USER_AGENT} megaindex\.com [NC,OR]
RewriteCond %{HTTP_USER_AGENT} Megaindex [NC,OR]
# Block MJ12bot only if you don't work with Majestic. For example, if you
# use link exchanges (Gogetlinks, Miralinks), Majestic data matters a lot
# there, so it's better not to block it.
RewriteCond %{HTTP_USER_AGENT} MJ12bot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} SemrushBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} cloudfind [NC,OR]
RewriteCond %{HTTP_USER_AGENT} CriteoBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} "GetIntent Crawler" [NC,OR]
RewriteCond %{HTTP_USER_AGENT} SafeDNSBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} SeopultContentAnalyzer [NC,OR]
RewriteCond %{HTTP_USER_AGENT} serpstatbot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} LinkpadBot [NC,OR]
# Slurp is the Yahoo! search crawler. If your site targets a foreign
# audience and gets traffic from Yahoo, it's better not to block it.
RewriteCond %{HTTP_USER_AGENT} Slurp [NC,OR]
RewriteCond %{HTTP_USER_AGENT} DataForSeoBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} "Rome Client" [NC,OR]
RewriteCond %{HTTP_USER_AGENT} Scrapy [NC,OR]
# Block these two only if you don't target Flipboard.
RewriteCond %{HTTP_USER_AGENT} FlipboardRSS [NC,OR]
RewriteCond %{HTTP_USER_AGENT} FlipboardProxy [NC,OR]
RewriteCond %{HTTP_USER_AGENT} ZoominfoBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} SeznamBot [NC,OR]
RewriteCond %{HTTP_USER_AGENT} "Seekport Crawler" [NC]

# Any request matching one of the conditions gets 403 Forbidden.
RewriteRule ^.* - [F,L]
```
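A note on the “^” anchor in RewriteCond patterns: it ties the pattern to the very beginning of the User-Agent header. Many crawlers send a header that starts with “Mozilla/5.0 (compatible; …”, so an anchored pattern never fires for them. A quick Python illustration, where `re` stands in for Apache’s regex engine and the AhrefsBot header is used only as a typical example; `condition_matches` is a hypothetical helper:

```python
import re

# A typical AhrefsBot User-Agent header (used here only as an example).
AHREFS_UA = "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"


def condition_matches(pattern, user_agent, nocase=True):
    """Rough stand-in for: RewriteCond %{HTTP_USER_AGENT} pattern [NC]."""
    flags = re.IGNORECASE if nocase else 0
    return re.search(pattern, user_agent, flags) is not None


# "^" pins the pattern to the start of the header, where this UA has "Mozilla".
print(condition_matches(r"^AhrefsBot", AHREFS_UA))  # False
# Without the anchor the bot name is found anywhere in the header.
print(condition_matches(r"AhrefsBot", AHREFS_UA))   # True
# An anchored pattern only fires when the header begins with the name.
print(condition_matches(r"^Scrapy", "Scrapy/2.11.0 (+https://scrapy.org)"))  # True
```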

These options only work with bots that identify themselves. If bots masquerade as other crawlers or pretend to be real visitors, these methods won’t help.

You can ban such bots by IP; more on that below.

Blocking bots via robots.txt

Some bots can be banned through the robots.txt file, but only on one condition: the robot or crawler must not ignore the directives written in this file. I’ve seen in my logs that many bots never even request this file, so they never see its directives.

On the whole, this method is of little practical use. Sick of some bot? Ban it at the server level, for example via .htaccess as suggested above. But I’ll show you anyway. It only works with robots and crawlers that identify themselves.

The code looks like this:

```
User-agent: DataForSeoBot
Disallow: /
```

This forbids a particular User-Agent from crawling the site. But, as I said, the bot can simply ignore this directive.

With robots.txt we can also do more than block a bot: for example, we can set the crawl rate, so the bot keeps visiting the site but doesn’t load it heavily.

```
User-agent: SafeDNSBot
Disallow:
Crawl-delay: 3 # 3-second pause between requests
```

Crawl-delay lets you set a delay between requests, but this directive is considered deprecated and most bots ignore it, so using it hardly seems worthwhile.
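To see how a bot that does respect robots.txt would read the two groups above, Python’s standard `urllib.robotparser` module can parse them. This only checks what the file says; it tells you nothing about whether a given bot actually obeys it. The example.com URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# The two groups from above; a blank line separates groups.
ROBOTS_TXT = """\
User-agent: DataForSeoBot
Disallow: /

User-agent: SafeDNSBot
Disallow:
Crawl-delay: 3
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("DataForSeoBot", "https://example.com/page"))  # False
print(parser.can_fetch("SafeDNSBot", "https://example.com/page"))     # True
print(parser.crawl_delay("SafeDNSBot"))                               # 3
# robotparser compares only the text before the first "/", so a full
# User-Agent header ("Mozilla/5.0 ...") matches neither group here.
print(parser.can_fetch("Mozilla/5.0 (compatible; DataForSeoBot/1.0)",
                       "https://example.com/page"))                   # True
```

Note that the directives inside a group are written without blank lines between them; some parsers, including this one, treat a blank line as the end of a group.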

In short, you cannot rely on robots.txt to block bots, because most robots simply ignore this file.

Blocking bots and crawlers by IP

Sometimes the logs show very brazen bots that don’t identify themselves but put a lot of load on the server. You can protect yourself from them with an anti-DDoS system, CAPTCHA and similar tools, or by blocking their IPs.

IP blocking is only appropriate when a particular bot sends many requests from a single IP address.

Usually, though, this makes little sense: bots typically have plenty of IP addresses in reserve, so when you block one address, the bot quickly comes back from another.

Still, this option sometimes helps. But always check an IP before blocking it: the most “brazen” IP in the logs may be your own. You could also accidentally block an IP belonging to the Yandex or Google crawlers.

The code for blocking looks like this:

```apache
# Apache 2.2 syntax (on Apache 2.4 it requires mod_access_compat).
Order Allow,Deny
Allow from all
Deny from 149.56.12.131 85.26.235.123 185.154.14.138 5.188.48.188 88.99.194.48 47.75.44.156
```

Add each new IP to the list, separated by a space.
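Picking out the most “brazen” addresses can also be scripted. This is a rough Python sketch, assuming each log line starts with the client IP (as in the common and combined formats). It counts requests per address, skips the addresses you list as your own, and formats the result as a Deny line like the one above. The function names and the threshold are hypothetical; each address still deserves a manual check before you block it.

```python
import ipaddress
import re
from collections import Counter

CLIENT_IP = re.compile(r"^(\S+) ")  # first field of a common/combined log line


def brazen_ips(lines, min_hits, never_block=()):
    """Return IPs with at least `min_hits` requests, most active first.

    Addresses in `never_block` (e.g. your own IP) and first fields that
    are not valid IP addresses are skipped.
    """
    protected = {ipaddress.ip_address(ip) for ip in never_block}
    counts = Counter()
    for line in lines:
        match = CLIENT_IP.match(line)
        if not match:
            continue
        try:
            ip = ipaddress.ip_address(match.group(1))
        except ValueError:
            continue  # e.g. a hostname instead of an address
        if ip not in protected:
            counts[ip] += 1
    return [str(ip) for ip, hits in counts.most_common() if hits >= min_hits]


def deny_line(ips):
    """Join the addresses into one space-separated Apache 2.2 Deny line."""
    return "Deny from " + " ".join(ips)
```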

Blocking bots will do your site good

Blocking will take some load off the server, and your competitors will have less information about your site, so feel free to block. The instructions are above. Of course, if your site is heavily hammered by bots from different IPs that don’t identify themselves at all, you will have to think about more serious protection methods, but the methods suggested above are often enough to reduce the server load.

With that, I bid you farewell. I wish you success and fewer harmful bots on your site!